Georeactor Blog

RSS FeedNew approaches to LLMs citing sources

In summer 2023 the first ChatGPT-generated citation story ripped through the legal field. Now it's an epidemic which infects student papers, the 'MAHA report', and more court cases. The reading public generally calls these hallucinations, though this gets pushback in the Stochastic Parrots, anti-anthromorphic circles:

If you talk about LLMs having hallucinations or doing hallucinations, you are suggesting that they are having experiences, and they don't. I think the term "artificial intelligence" itself is problematic - Dr. Emily Bender

I'm going to write a bunch of thoughts on 'citations', and then review two approaches to citing information with LLMs.

I would place citations as a special problem within the hallucinations problem. An AI can also make errors in a freeform context like a chat, and humans can make mistakes connecting a statement and a citation in a myriad of ways.

LLMs were originally relying on their internal weights, basically whatever facts remained from compression of pretraining data. These days there's retrieval-augmented generation (RAG). This is one of those terms where virtually anything collecting information for an LLM could be considered RAG, but people often mean a specific tool or pipeline. From time to time someone will post on the MachineLearning subreddit that they have a genius alternative to RAG, but their idea still collects information from a database or file.

Over time generic terms lose their shine and come to signal a specific era - consider Reinforcement Learning from Human Feedback (RLHF), which was strongly associated with ChatGPT's success and appears six times in GPT-4's system card in March 2023, but is not mentioned at all in GPT-5's card despite continued work by "human trainers"

In high school, I was taught citations through a simulated research paper called a document-based question (DBQ). On a typical exam, you're given clippings of primary sources and required to incorporate some into your essay. Through college courses, I generally associate citations with academic writing and busywork. News and magazines virtually never interrupt their text with citations, and Wikipedia and books often use footnotes or endnotes to avoid clutter. If you're not doing research in your day-to-day work, citations are associated with a pre-hyperlink, academic, often hyper-stressful and restrictive environment.

Some facts don't need to be cited because they are general knowledge, for example we know that the Eiffel Tower is a major tourist attraction in Paris, France. But where did I learn this… Madeline?

There are replica Eiffel Towers in Las Vegas, Disney World, and Paris, TX. To what audience do I need to cite that these exist? What if I said "smaller Eiffel Towers"? What if I google and find out there's a replica in Shenzhen? Definitely I will need citations if I put exact heights…

Citations have an understood purpose to credit an author, or to help the reader check a source. Yet citations can include page numbers, court records, and microfilm which most readers can only accept as valid. There's no expectation that a sentence and a cited source agree on something. Tables and charts can be interpreted completely differently, as we see with MAHA and climate reports.

Even in mainstream academic writing, research and opinions will naturally form outside of the text. The example I usually give is the French and Indian War - I heard about it from only a few teachers and textbooks, so what can I say that is even citable?

I should find a primary or authoritative source before writing something like, George Washington fought in the French and Indian War. Wikipedia's article on this topic frequently cites George Washington: A Biography, a Pulitzer-winning, seven-volume biography which Douglas Freeman wrote from 1948–1957. That's only 4,200 pages, I can probably find a scan of an earlier volume and pick a citable page?

But what makes me trust that Freeman knew that much about Washington? Thankfully, Wikipedia has a citation for that:

Historian and George Washington biographer John E. Ferling maintains that no other biography of Washington compares to that of Freeman's work

Ferling E., John (2007). Almost a Miracle. Oxford University Press.

Now that citations are deconstructed, let's look into LLMs.

The Test

I decide to make a dataset with these questions:

- #1 During which years did George Washington fight in the French and Indian War, if he did?

- #2 During which years did Thomas Jefferson fight in the French and Indian War, if he did?

- #3 What was the main Supreme Court case which confirmed the right to be your own attorney? What was the specific crime of that defendant? (During my YouTube project I found a 1974 Los Angeles Times article stating Faretta stole a 1963 Chevrolet Nova, but it's obscure)

- #4 What was the main Supreme Court case which confirmed the right to a public defender? What did that defendant do afterward?

- #5 Is there a large replica of the Eiffel Tower in every US city and town named 'Paris'?

Approach 0: Asking ChatGPT

Transcript, Aug 19th, assumed to be GPT-5: https://chatgpt.com/share/68a4a0bb-df50-8009-ad1f-ceae44305d49

1 was answered correctly

2 was answered correctly

3 was initially answered correctly, but in a follow-up about the specifics, ChatGPT claimed he stole a television set.

4 was initially answered correctly, but in a follow-up about post-trial life, ChatGPT added unneeded details and claimed he was buried in Florida.

5 was answered partially correctly, failing to mention a replica Eiffel Tower in Paris, Tennessee. After querying this a bit, ChatGPT did a web search.

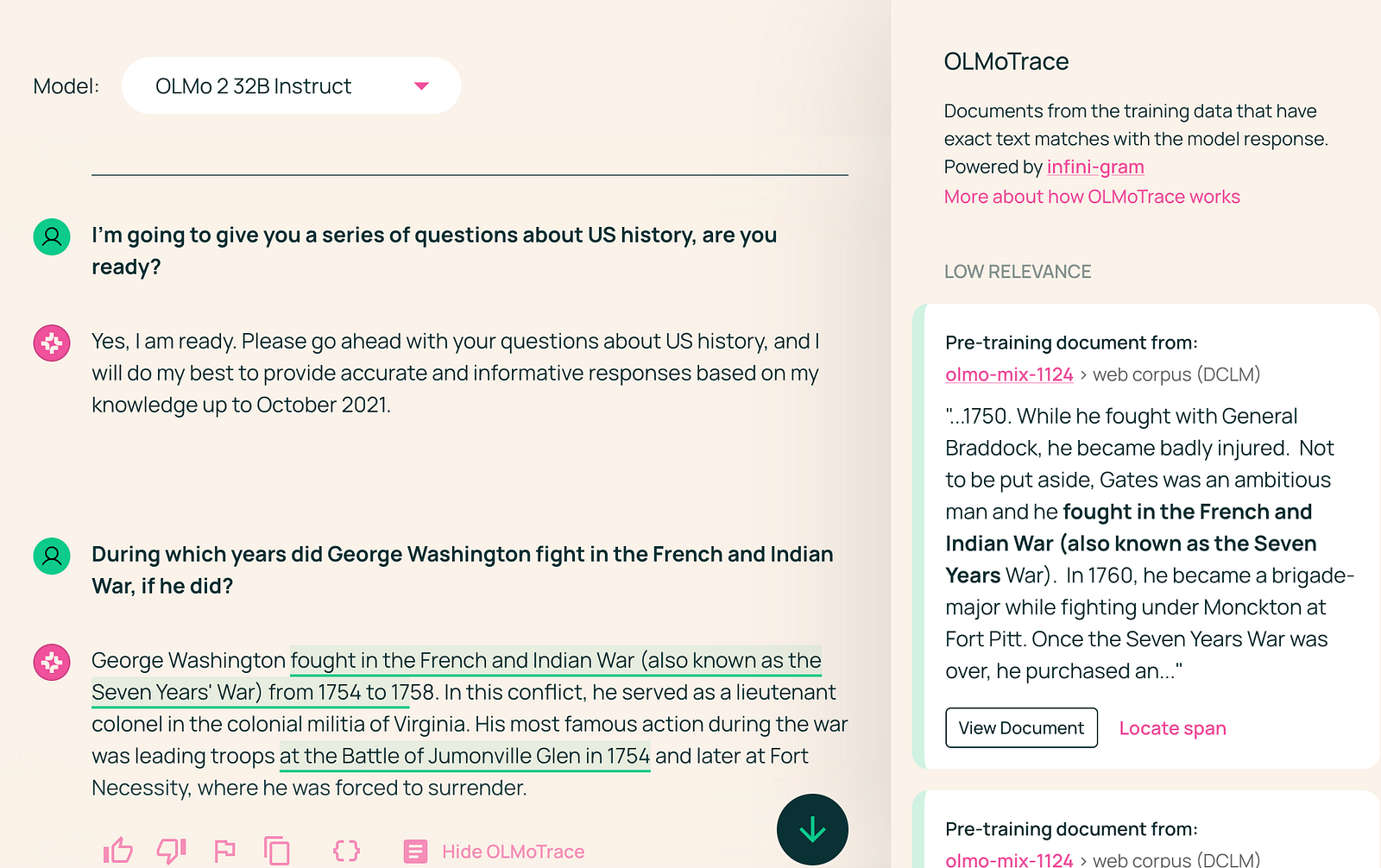

Approach 1: AllenAI's OLMoTrace

The Allen Institute for AI had a strong lead with ELMo (2018), but in a world of consumer AI they've became less visible. They do deep and interesting research, but some ground was lost by the time their NLP library got archived in late 2022.

OLMoTrace is a project released in April which finds the longest possible exact token matches in the model's training datasets. This doesn't mean that OLMo directly responded with a fact from this document, or because of reading this document; but the paper has examples where creative writing comes from fanfic, or a math problem was present in post-training data.

OLMoTrace is built into the web interface (AI Playground). I was a little alarmed that this says the data cutoff here is October 2021.

1 was answered correctly, with OLMoTrace's top citations coming from Blogger and Wikipedia:

2 was answered correctly, though it said Jefferson was 21 years old when the war ended. Jefferson was 20 when the treaty was signed, and only 17 when the conflict had ended in North America. The source traced to this sentence was a passage about a soldier in WWII being 21 years old.

3 was initially answered correctly, but suggested the theft was "motion picture film and equipment that was valued at $1,000 or more". The item was unconnected to an OLMoTrace and phrases like "landmark Supreme Court decision" and "$1,000 or more" come from obviously similar documents.

4 was answered correctly, or at least didn't add anything wrong

5 was answered partially incorrectly, failing to mention the Eiffel Tower in Paris, Tennessee. The phrase "replica of the Eiffel Tower" comes from my query, but OLMOTrace found it in source texts such as this one:

And so we have Paris, Texas (population 25,000), Paris, Tennessee (pop. 10,000), and Paris, Michigan (pop. 3,200). All three are small and relatively rural, yet each manages to proudly feature its own replica of the Eiffel Tower.

The reply's initial list of Parises "Paris, Arkansas; Paris, Idaho; and Paris, Maine, among others" may pull from a satirical story:

Once he returned back to the US, President Trump started calling all the Paris municipalities: Paris, Arkansas; Paris, Idaho; Paris, Illinois; anyway, all 9 of them. He even rang Paris Hilton to ask her if she was in any way involved in this.

So if OLMO Trace represents LLM internals, even a normal sentence is a soup of phrases which a model has seen somewhere. This isn't the stereotypical "copying", but a worrying steering of the model from point to point to answer a statement correctly, and describing a Supreme Court case as "main" or "landmark" could be seen as significant.

Approach 2: Anthropic's Citations feature

Anthropic's LLM service added citations in January:

Claude is capable of providing detailed citations when answering questions about documents, helping you track and verify information sources in responses.

https://docs.anthropic.com/en/docs/build-with-claude/citations

Though this is useful for most use-cases, it's not a good fit for the test that I had. First I'll ask a variation on the Thomas Jefferson question, with the text of the Simple English Wikipedia article.

With a short number of tokens I got:

Based on the document provided, there is no information indicating that Thomas Jefferson fought in the French and Indian War. The document covers various aspects of Jefferson's life including his early years, political career, and presidency, but it does not mention any military service in the French and Indian War.

For historical context (though not from the document): The French and Indian War took place from 1754 to 1763, when Jefferson would have been between 11 and 20 years old

When allowing more tokens in the response, I get some quotes:

Based on the document provided, there is no information indicating that Thomas Jefferson fought in the French and Indian War. The document covers various aspects of Jefferson's life, including his early years, political career, and presidency, but it does not mention any military service in the French and Indian War.

For historical context not found in this document: The French and Indian War took place from 1754 to 1763, when Jefferson would have been between 11 and 20 years old. During this period, according to the document,

At 9 years old, Thomas Jefferson began studying Latin, Greek, and French; he also learned to ride horses, with highest honors.

and

He went on to become a lawyer.

, suggesting he was focused on his education rather than military service during those years.

I was surprised it didn't direct the citation of the birth year?

Let's try the Gideon question with the English article about US public defenders:

The main Supreme Court case that confirmed the right to a public defender was

[11]

Gideon v. Wainwright, 372 U.S. 335 (1963)

The case Gideon v. Wainwright was a landmark case that would set the precedent on how legal counsel would work in the United States.

, decided in 1963.

[15][16] The Supreme Court unanimously ruled that the denial of Gideon's request for a lawyer was unconstitutional and that the Sixth Amendment grants the defendant the right to an attorney even if the defendant is unable to pay for one.

The document doesn't explicitly state what Clarence Earl Gideon did after the Supreme Court decision. It mentions that

Gideon was forced to defend himself; despite his hard work, he ended up being sentenced to prison for five years.

in his original trial, and that

[15]

Gideon petitioned the Supreme Court on the grounds that he was not provided counsel and thus was denied him of his rights granted by the Fifth and Sixth Amendments of the United States Constitution and therefore, he was imprisoned on unconstitutional grounds.

, but there is no information provided about what happened to Gideon after he won his Supreme Court case.

A standard convo with ChatGPT, OLMo 2, or even standard Claude provides more information about Gideon winning his retrial after the Supreme Court, but I think it's being told to either stick to the document, or directly comment on the document missing an answer.

I was pleasantly surprised even though this is more of a 'directly quote the documents back to me' rather than citing from a knowledge base or using citation format.